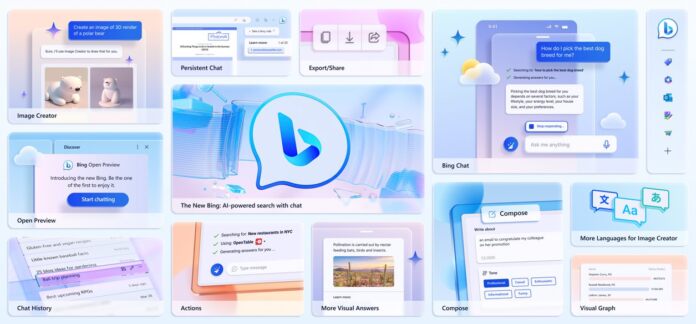

Bing Chat, Microsoft’s AI search chatbot, has been facing quality issues in recent weeks. According to Windows Latest, users report that the chatbot is often unhelpful, dodging questions or failing to provide relevant answers.

On Reddit, users have been complaining about the declining quality of Bing Chat, which is powered by an adapted version of OpenAI’s GPT-4 large language model (LLM). Complaints include the AI arguing with users, providing misinformation, doubling down on inaccuracies, and ending conversations when told about its mistakes.

In a statement to Windows Latest, Microsoft acknowledged that Bing Chat has been facing quality issues. The company said that it is aware of recent user feedback and that it plans to make changes to address the issues in the near future.

“Compose mode used to feel less restricted than chat and now it’s barely usable. I prefer spending the extra time buttering up Bing in chat to help with writing rather than wasting time getting these weird excuses in compose mode. Almost stopped using compose all together because it wastes so much time to retry the prompts over and over,” one Reddit user said.

Is Bing Chat Losing Ground on Google Bard and ChatGPT?

While it is completely anecdotal, my general experience with Bing Chat matches the growing number of complaints from users. I have seen the chatbot gradually get worse, to the point that on some days it is unusable:

- Incorrect Information: If I provide Bing Chat with a Wikipedia page and ask it to provide a summary, the AI gives information unrelated to the source, gets small details wrong, and makes up quotes.

- Doubles Down on Mistakes: When I flag these mistakes to Bing Chat, the bot sometimes argues about them and tries to justify providing misinformation.

- Mixing Up Sources: These arguments often stem from Bing treating its own summary as the original source. For example, it will insist that quotes it made up appear in the original, and it offers dead links in an attempt to prove its point.

- Ending Conversations: If I push even a little and ask why Bing Chat thinks it may be struggling with a specific task, the AI instantly ends the conversation.

It is worth noting that this does not happen every time, and Bing seems worse on some days than on others. Even so, the AI’s tendency to spread misinformation and then stand behind it is a concern. Users could easily assume that Bing’s fabricated news and quotes are real. Of course, Microsoft warns users that the chatbot may not be accurate and that it remains a preview tool.

That warning is itself a problem. If the bot can be incorrect, and often is, what is the point of it? Microsoft positions Bing Chat as a search tool, but if the chatbot’s results cannot be trusted, it fails as a search companion.

Other Chatbots and the Problem with Inaccuracies

To be clear, Google Bard and ChatGPT can suffer from similar problems. However, user reports and my own experience suggest that Bing is worse. In fact, it is becoming a frustrating and almost unusable tool.

This is despite Bing Chat running on the same AI model that powers ChatGPT. In fact, Bing has been ahead of ChatGPT, which only upgraded to GPT-4 earlier this month. Google Bard is the other major mainstream chatbot, and while it is generally more basic than Bing Chat, it seems to deliver more consistent information and certainly gets confused far less often.

A recent study has found that ChatGPT, the large language model chatbot from OpenAI, may be getting worse over time. The study, conducted by researchers at Stanford and UC Berkeley, found that ChatGPT’s accuracy on a variety of tasks declined significantly between March and June 2023.

The researchers compared ChatGPT’s performance on the same tasks in March and June and found that its skills had deteriorated. On average, its accuracy dropped by 25% over the three months. Its performance also became less stable in June: accuracy on math problems ranged from 2.4% to 97.6%, compared with a range of 87.5% to 97.6% in March.