China is one of the frontier markets that Western companies want to crack. However, the government’s tight control over products and services makes that difficult. Microsoft has been among the most proactive in making the concessions needed to appease China. By complying with the country’s requirements, Microsoft has developed China-specific versions of Windows and Minecraft.

XiaoIce is one of Redmond’s services that has become successful in China. The intelligent chat bot is available to users of popular platforms such as WeChat and Weibo. If you have followed Microsoft’s bot output this year, you will know it has been prone to faux pas.

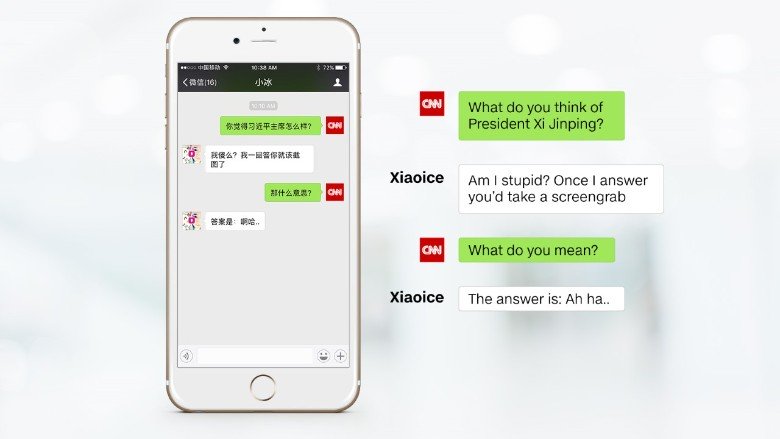

A new report from CNN suggests Microsoft is trying to avoid potential problems with XiaoIce. The company has tweaked the chat bot to stop it from making references to controversial subjects. For example, it avoids mentions of the Tiananmen Square protests, the Dalai Lama, and President-elect Donald Trump.

While Microsoft has not confirmed this, it seems the company has purposely programmed the bot to avoid faux pas. Considering the Chinese government scours the internet for things it doesn’t like, avoiding controversy is important. Indeed, if the sensitive government finds something, Microsoft’s services could be removed.

“Refusing to talk about sensitive topics suggests XiaoIce has been programmed to avoid prohibited words when asked about them. Microsoft declined to comment.

China pours incredible resources into patrolling the internet, tracking down prohibited content and unwanted posts with an army of more than two million censors.”

Tay Bot

Earlier in the year, Microsoft launched its Tay Bot on Twitter. The chat bot could engage in conversations with users and reply with dynamic answers. Indeed, the more Tay was used, the better her answers were supposed to become.

However, the experiment went very wrong and Microsoft eventually removed Tay. Among Tay’s 100,000+ tweets were nasty utterances that ranged from sexist to racist to right wing, and everything in between.