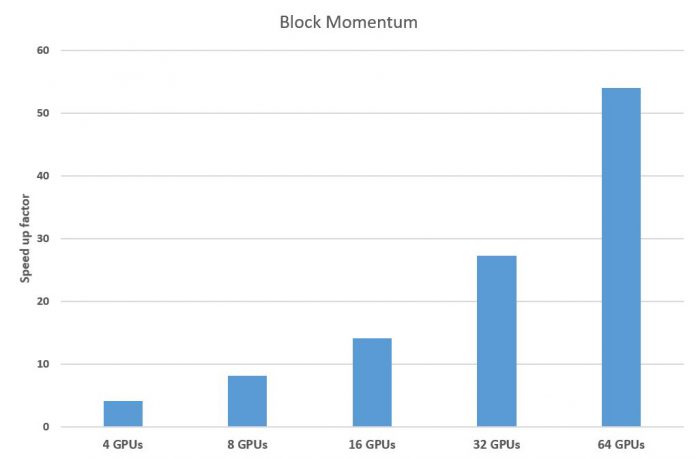

With CNTK 1.5, Microsoft is introducing a parallel processing technique called Block Momentum, which improves scalability across multiple GPUs and machines while maintaining accuracy. The release also offers better performance and is paired with an improved network description language called BrainScript.
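To give a rough sense of how a block-momentum style update works, here is a minimal Python sketch on a toy problem. It is not CNTK's implementation. It assumes the general scheme in which each worker trains independently on its own shard for a block of data, the resulting models are averaged, and the averaged change is smoothed with a momentum term before being applied to the global model. The function names (`local_sgd`, `block_momentum_step`, `train`), the toy quadratic loss, and the hyperparameter values are all illustrative assumptions.

```python
def local_sgd(weights, shard, lr, steps):
    """Plain SGD on one worker's shard of a toy least-squares problem.

    Assumption: the loss per coordinate is 0.5 * (w_i - shard_i)^2,
    so its gradient is (w_i - shard_i).
    """
    w = list(weights)
    for _ in range(steps):
        for i in range(len(w)):
            w[i] -= lr * (w[i] - shard[i])
    return w


def block_momentum_step(global_w, delta, worker_ws, block_momentum, block_lr):
    """Combine the workers' models into one global update.

    The averaged model change for this block is filtered with a momentum
    term instead of being applied directly.
    """
    n = len(worker_ws)
    new_w, new_delta = [], []
    for i in range(len(global_w)):
        avg = sum(w[i] for w in worker_ws) / n   # average of worker models
        g = avg - global_w[i]                    # aggregated change this block
        d = block_momentum * delta[i] + block_lr * g
        new_delta.append(d)
        new_w.append(global_w[i] + d)
    return new_w, new_delta


def train(shards, blocks=100, lr=0.1, steps=5):
    """Run several blocks of parallel training (simulated sequentially)."""
    n = len(shards)
    dim = len(shards[0])
    w = [0.0] * dim
    delta = [0.0] * dim
    # Illustrative choice: momentum grows with the number of workers.
    block_momentum = 1.0 - 1.0 / n
    block_lr = 1.0
    for _ in range(blocks):
        # Every worker starts the block from the same global model.
        worker_ws = [local_sgd(w, shard, lr, steps) for shard in shards]
        w, delta = block_momentum_step(w, delta, worker_ws,
                                       block_momentum, block_lr)
    return w


if __name__ == "__main__":
    shards = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
    print(train(shards))
```

In this toy setup the global model settles near the mean of the four shards. Workers only exchange models once per block rather than after every minibatch, which is where the communication savings in such a scheme would come from.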

As noted by Frank Seide, principal researcher and one of the architects of CNTK, “Expressing very deep nets, beam decoding, and other complex structures is greatly simplified with BrainScript.” It allows developers to program CNTK easily, and supports “infix operators, nested variables, function definitions, recursive function calls, arrays, and even lambdas.”

Improved I/O Architecture and Toolbox Options

CNTK 1.5 also includes an upgraded I/O architecture, which brings more flexible readers for text and speech and makes it easier to input popular formats for deep learning. This saves developers the time and effort of writing their own code to parse such formats.

Microsoft has also expanded CNTK's library of standard components with Deep Residual Nets for Image Recognition and Sequence-to-Sequence with Attention.

These features expand the toolbox available to CNTK users and give developers access to advanced tools to add AI capabilities to their applications.

The CNTK toolkit was first hosted on Microsoft's own CodePlex site and offered under a restrictive academic license. However, the company later moved the project to GitHub under an open source license, making it easier for developers to build their own deep learning apps.

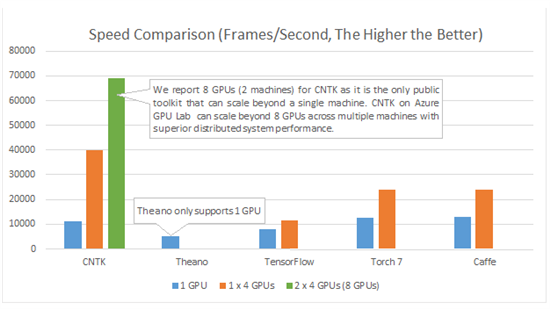

Removing the license restrictions has allowed developers and corporations to use the CNTK toolkit more freely. It can scale beyond a single machine, and performs better than other deep learning tools such as Theano, Caffe, Torch 7, and TensorFlow.

CNTK 1.5 is available on GitHub for download.